I really like Zimbra, but it tends to use a ridiculous amount of CPU while just sitting there, which makes it a bad choice for someone like me who wants to run it with a few users at home as a virtual machine. As I started growing the number of virtual machines on my physical host at home, things started to get a little cramped. Zimbra just plain uses far more CPU "out of the box" than the other virtual machines (I've got enough RAM), and it was starting to become my bottleneck.

After installing Zimbra and just leaving it running, it used the better part of a processor core most of the time. That's not good if you've got a limited amount of hardware like I do. However, it wasn't too difficult to get my Zimbra server to use almost no CPU most of the time. As a nice side effect of this project, I'll also be trying to bump down the amount of memory allocated to my Zimbra VM, but that wasn't the highest priority. I am running the latest version of Zimbra (6.0.6 at the time of writing), but the tricks should apply to almost any version.

First, I started by disabling services that I really wasn't using. I'm not monitoring my Zimbra server using snmp, so snmp was a pretty easy one. My server isn't for an IT department or hosting service, so stats and logging history aren't overly important, and I also chose to disable logger and stats. To disable those, run:

zmprov ms mail.whatan00b.com -zimbraServiceEnabled snmp

zmprov ms mail.whatan00b.com -zimbraServiceEnabled logger

zmprov ms mail.whatan00b.com -zimbraServiceEnabled stats

Now, let's do a restart:

zmcontrol stop; zmcontrol start

This really only gave me gains in memory usage, but since I didn't need those services turned on, that was ok. Other good candidates to disable would be antispam and antivirus, but I didn't want to turn off spam filtering on my system.
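If you have more than a couple of services to turn off, the three zmprov calls above can be wrapped in a small loop. This is only a sketch: the function name `disable_zimbra_services` and the `DRY_RUN` switch are my own inventions, not part of Zimbra. By default it just prints the commands it would run so you can eyeball them first.

```shell
# Sketch: print (default) or run the zmprov calls that disable services.
# disable_zimbra_services and DRY_RUN are hypothetical names, not Zimbra tools.
# Usage: disable_zimbra_services <server> <service> [<service> ...]
disable_zimbra_services() {
  server="$1"
  shift
  for svc in "$@"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      # Dry run: only show the command, same form as used in the post
      echo "zmprov ms $server -zimbraServiceEnabled $svc"
    else
      zmprov ms "$server" -zimbraServiceEnabled "$svc"
    fi
  done
}
```

Run as the zimbra user, `disable_zimbra_services mail.whatan00b.com snmp logger stats` prints the three commands, and `DRY_RUN=0 disable_zimbra_services mail.whatan00b.com snmp logger stats` actually runs them. You still need the zmcontrol restart afterwards. To see what is currently enabled, `zmprov gs mail.whatan00b.com zimbraServiceEnabled` should list the services on that server.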

After disabling those extra services, I was still having CPU spikes every minute (and getting rid of those was ultimately what I was after). After doing a little digging, it turned out that Zimbra was calling zmmtaconfigctl, which makes several zmprov calls. If you have been around Zimbra for any amount of time, you know that zmprov calls are expensive and time-consuming. It turns out that this script just scans for updated config to apply to the MTA. I really can't think of a reason that I would need this every minute. A quick Google search led to a forum post on how to increase the interval at which this script is called. It's defined in zmlocalconfig, and 60 seconds is assumed if the value is not set. I chose to have it run every 2 hours (a fairly arbitrary decision):

zmlocalconfig -e zmmtaconfig_interval=7200

zmmtactl restart
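To put that interval change in perspective, here is a quick bit of arithmetic on how often the config scan fires per day at a given interval. The helper name `runs_per_day` is just something I made up for illustration.

```shell
# Sketch: how many times per day a job runs at a given interval in seconds.
# runs_per_day is a hypothetical helper, not a Zimbra command.
runs_per_day() {
  # 86400 seconds in a day, integer division
  echo $(( 86400 / $1 ))
}
```

With the default of 60 seconds, `runs_per_day 60` gives 1440 scans a day, while `runs_per_day 7200` gives 12. Running `zmlocalconfig zmmtaconfig_interval` afterwards should show the value you set, which is a handy sanity check before restarting the MTA.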

That got my spikes down quite a bit, but I was still getting spikes of around 20% every couple of minutes or so. While this wasn't all that detrimental, it would be good for my overall CPU usage to get rid of them. A quick look at the crontab for the zimbra user showed that the script /opt/zimbra/libexec/zmstatuslog was being run every two minutes. Apparently, this script checks the status of the Zimbra server so that it can be displayed in the Admin Console. Since I rarely ever log into the admin console, I really don't need this to run very often. While there's really no use for me to have it running every two minutes, I did leave it set to run every hour:

0 * * * * /opt/zimbra/libexec/zmstatuslog
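Since an upgrade can reset the crontab (see the update at the end of this post), it can be handy to script this change instead of editing by hand. Below is a rough sketch of a filter that rewrites the zmstatuslog entry to the hourly schedule `0 * * * *`. The function name `hourly_statuslog` is made up, and it assumes the entry is a normal five-field cron line with the script path as the sixth field.

```shell
# Sketch: rewrite the zmstatuslog cron entry to run at minute 0 of every hour.
# hourly_statuslog is a hypothetical name. Reads a crontab on stdin and
# writes the modified crontab to stdout. Assumes the script path is the
# sixth whitespace-separated field of an uncommented line.
hourly_statuslog() {
  awk '
    $6 == "/opt/zimbra/libexec/zmstatuslog" && $1 !~ /^#/ {
      $1 = "0"; $2 = "*"; $3 = "*"; $4 = "*"; $5 = "*"
    }
    { print }
  '
}
```

As the zimbra user, something like `crontab -l | hourly_statuslog | crontab -` would apply it. I'd look at the output of `crontab -l | hourly_statuslog` first to make sure only that one line changed.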

Now it's time to look at the good we've done.

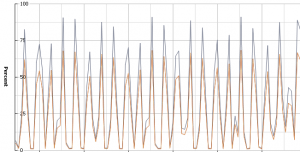

This is what we started with:

Obviously, quite a bit of CPU usage. You can see why I needed to do something to fit more VMs on this host.

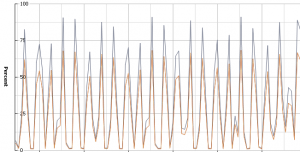

And now:

Looks great now!

There are a few extra cron jobs left in the zimbra user's crontab that really don't need to run for me, such as the Dspam cron jobs, but those only run once a day. If you're really zealous, you can disable those as well, assuming you have Dspam disabled (the default).
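If you do want to go that far, one way is to comment the entries out rather than delete them, so they are easy to bring back. Here is a minimal sketch of a filter that does that. The name `comment_out_matching` is my own, and it assumes the Dspam entries can be recognized by the string "dspam" appearing somewhere on the line, so check your own crontab before trusting it.

```shell
# Sketch: comment out crontab lines that match a pattern (case-insensitive).
# comment_out_matching is a hypothetical helper. Reads a crontab on stdin,
# writes the result to stdout. Lines that are already comments are untouched.
comment_out_matching() {
  awk -v pat="$1" '
    tolower($0) ~ tolower(pat) && $0 !~ /^[ \t]*#/ { print "# " $0; next }
    { print }
  '
}
```

As the zimbra user, `crontab -l | comment_out_matching dspam` shows what would change, and piping that into `crontab -` would install it.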

Update: For anyone who is interested, I did the 6.0.6 -> 6.0.7 upgrade a few weekends ago and had my cron jobs reset. All the other changes stuck.