MySQL Memory Usage Growth After Lots of Table Creates/Drops

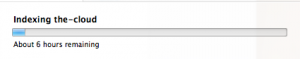

We've been having troubles for a while now with a cluster of MySQL nodes which consume a never-ending amount of memory over time until it eventually runs out, forcing us to restart MySQL if we catch it in time, or reboot the box if we don't. After doing tons of searches (and looking through countless bug reports), it seemed like it could have been a few memory leaks, fixed in late 5.1 or early 5.5 land. As this cluster was slightly older and not updated regularly, we pushed things over to new nodes and up to a modern version of 5.5. However, this didn't seem to solve the problem. Every week or so, we'd fail over to a secondary node and restart things. The biggest hint towards it being caused by all the creates is that the "leak" flowed through replication and the secondary nodes would run out within several hours of the primary.

As it turns out, the answer was quite simple. According to the Percona MySQL Performance Blog, the MySQL dictionary cache memory is allocated with each opened table, and never given back - without any limit. This isn't so bad I suppose, except if you're opening something like half a million tables per week and you just so happen to have a machine with a finite amount of memory. Then you might have a bit of trouble.

The fix is bad news if you're a MySQL community-build user. There's no way to limit it without switching to Percona Server (which I highly recommend over the stock MySQL builds, anyway). If you have installed Percona Server in favor of MySQL you can set innodb_dict_size_limit to limit the growth. This is '0' by default which mimics current stock MySQL. Note that this is a soft limit, so if you still have that many tables open you'll just need moar memories.

I should also note that while this setting says it's dynamic, it's a danger zone. I set this to a not-terribly-aggressive-yet-still-lower-than-current-dictionary-size value on the non-active node and everything came to a screeching halt with this in the error log:

--Thread 1264216384 has waited at dict0dict.c line 744 for 688.00 seconds the semaphore: